Hackathon 2025

AI Agents in Acumatica

Chapter 1: The Crazy Idea… Followed by the Panic

Before we even set foot in the hackathon venue, our team was already a whirlwind of virtual meetings and brainstorming sessions. Six of them, to be precise. We wrestled with technical approaches and debated the merits of various solutions. One team member brought up a current problem in their company. Customers were emailing their orders and customer service had to manually enter them into Acumatica.

Can you imagine that? They receive emails from customers who, within four hours, arrive at the warehouse, expecting to collect their orders! Talk about pressure. Missing an email, or making a manual input mistake, is inevitable. And with up to 10 minutes spent creating each order in Acumatica, not to mention two dozen clicks, it became obvious we had to evolve past manual processes.

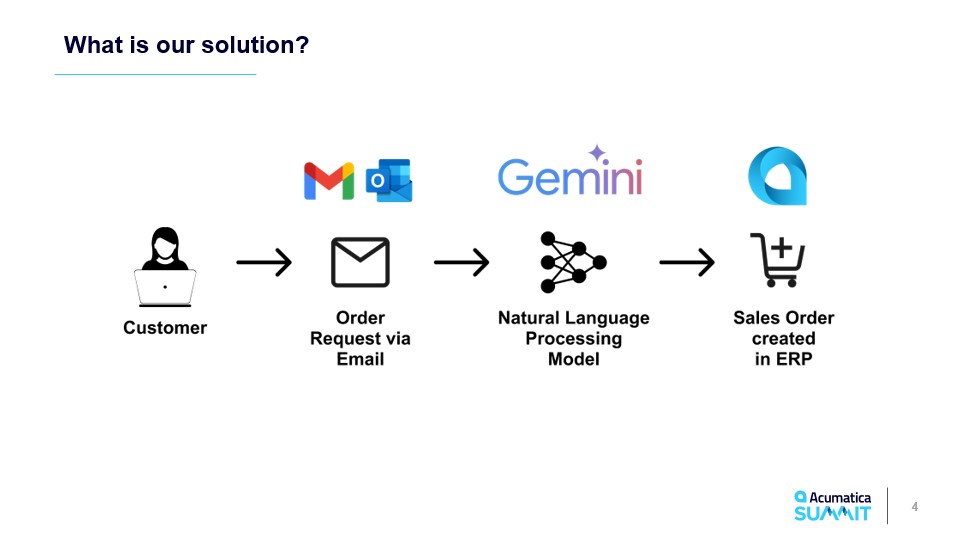

The challenge was clear: Transform incoming customer emails directly into Sales Orders inside of Acumatica, using the power of AI.

Now, I’ve dabbled in AI before, mostly recommendation systems and fine-tuning LLMs for fun (and profit?). The thought of weaving an AI agent directly into business logic was… a bit daunting. Most companies treat AI as external knowledge, an assistant, or a smart "overlay". The user performs tasks in the system, informed by AI. The real innovation is when the AI agent acts inside the system. Your AIs are actually working for you, not just informing you.

So, when I volunteered my "AI expertise", a wave of panic washed over me! Imposter syndrome, anyone? I was ready to jump on the AI bull and wrangle it to our advantage. But I had no idea what I was in for.

Chapter 2: Tedious Data Labeling: The Unsung Hero of AI (Or Is It Villain?)

Saturday morning at 11 am. We had 25 hours until presentations (minus the essentials: 8 hours of sleep, a shower, and, you know, the important things).

We gathered at the venue, reviewed the architecture, and jumped right into prepping our training data. This is one of the least romantic parts of building a machine learning product... but it's completely essential to success. This is when we took a hard look at the emails we were using to train the model. While Lakshmi entered the actual training data, Kulvir and I wrangled the order data into a very difficult shape, one that Acumatica would actually accept for creating Sales Orders. It was truly a joint operation of painstaking, precise work.

Six long, mind-numbing hours later, I was done with my JSON structuring! By the time dinner rolled around, we had our dataset, and a renewed enthusiasm! (Mostly).

JSON, or JavaScript Object Notation, is a lightweight format that is often used to communicate data over the internet. Think of it as a universal language for APIs.

When we pulled emails via the API, we received a JSON object containing the raw HTML text of the email (see example below). We trained our model to output a JSON object that would go in the payload of a PUT API call that created a sales order.
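To make the two shapes concrete, here is a minimal sketch. The email field names and the customer and item codes are illustrative assumptions, not our actual data. The `{"value": ...}` wrapper around each field follows the convention of Acumatica's contract-based REST API, but treat the exact field list as an assumption too.

```python
import json

# Hypothetical shape of an incoming email record (field names are assumptions).
incoming_email = {
    "from": "buyer@example.com",
    "subject": "Order for pickup today",
    "body": "<p>Please prepare 2 x WIDGET-01 and 5 x BOLT-10 for pickup.</p>",
}

def build_sales_order_payload(customer_id, lines):
    """Wrap extracted values in the {"value": ...} shape used for the PUT payload."""
    return {
        "CustomerID": {"value": customer_id},
        "Details": [
            {"InventoryID": {"value": item}, "OrderQty": {"value": qty}}
            for item, qty in lines
        ],
    }

# The kind of output we wanted the model to produce for the email above.
payload = build_sales_order_payload("ABCCORP", [("WIDGET-01", 2), ("BOLT-10", 5)])
print(json.dumps(payload, indent=2))
```

The nesting is what made the labeling so tedious: every training example had to pair a messy email with one of these exact structures.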

Chapter 3: It Works! …With a 50% Error Rate

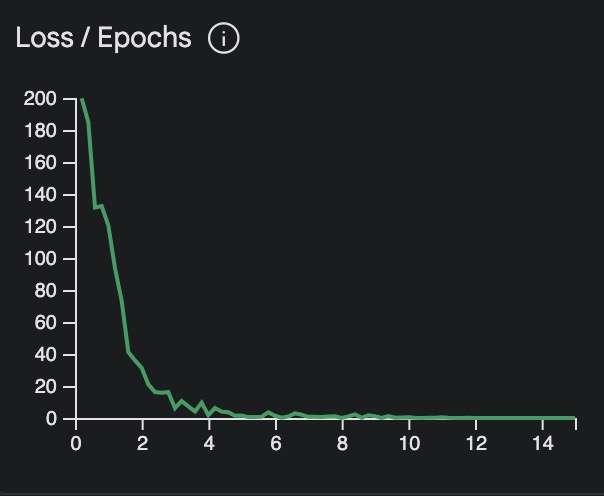

Saturday dinner time. 18 hours until presentation (or T-minus 10 hours if you subtract our sleep, etc.). We pushed forward and I started fine-tuning the language model. As the loss function of our model "converged" (fancy jargon meaning it started figuring things out), I was euphoric!

At the 8th hour of programming I actually cheered "IT WORKS!" When fine-tuning a model... it isn't always obvious if things are actually "working" or not. Especially early on. It's difficult to measure. And once the process begins working... it is unlike any other programming. We are not simply writing sequential commands. We have these creative "aha" moments that are impossible to simply describe in words. It truly is different.

It was time to program the integrations - some plain, old, vanilla, Von Neumann machine coding.

The core of the integration needed a process that pulled in emails via Acumatica's API, extracted key data, and fed that to our freshly trained model. Then the model's output became the payload for a PUT API call, which ultimately created the sales order.
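The flow above can be sketched in a few lines. This is not our hackathon code: the function names are hypothetical, and the three steps are passed in as plain callables so the sketch runs without Acumatica or a tuned model behind it.

```python
from typing import Callable, Iterable

def process_inbox(
    fetch_emails: Callable[[], Iterable[dict]],
    extract_order: Callable[[str], dict],
    put_sales_order: Callable[[dict], str],
) -> list[str]:
    """Run each email through the model and submit the result as a sales order."""
    order_numbers = []
    for email in fetch_emails():
        payload = extract_order(email["body"])          # the tuned model's JSON output
        order_numbers.append(put_sales_order(payload))  # the PUT call creates the order
    return order_numbers

# Stand-ins for the email API, the model, and the sales order endpoint.
def fake_fetch():
    return [{"body": "2 x WIDGET-01"}, {"body": "5 x BOLT-10"}]

def fake_model(body):
    qty, _, item = body.partition(" x ")
    return {"Details": [{"InventoryID": {"value": item}, "OrderQty": {"value": int(qty)}}]}

submitted = []

def fake_put(payload):
    submitted.append(payload)
    return f"SO{len(submitted):06d}"

order_numbers = process_inbox(fake_fetch, fake_model, fake_put)
print(order_numbers)
```

Keeping the model behind a single function call is what makes the rest "plain, old, vanilla" code: everything around it is an ordinary loop.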

We were ready to test. Success!! … or so we thought. It’s like that feeling when you disassemble and rebuild your car’s engine and end with “spare parts” (I may be speaking from personal experience here).

We committed a classic machine learning sin: we tested on our training data. Rookie move.

Our model was only hitting a 50% success rate... And then... all the air seemed to leave the room. The marketing team stared at me as if I'd grown a second head.

Are you serious? You want us to sell a 50% error rate?

Can you... like... try harder?

They were right, of course. We had a 50/50 chance of making the correct order? That is not the sort of gamble you give your customers.

Loss functions measure the "cost" of our model being incorrect. If a model is highly inaccurate, the loss value will be high. If the loss is low, the model is considered very accurate (in most situations). Machine learning algorithms work by minimizing the loss function, so the model gets better and better as it sees more data.
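For the curious, here is a tiny illustration of the idea. Cross-entropy is the usual loss when tuning language models; I am assuming that is what our tuning job reported, and the probabilities below are made up.

```python
import math

def cross_entropy(probs_for_correct_tokens):
    """Average negative log-probability the model assigned to the right answers."""
    total = sum(math.log(p) for p in probs_for_correct_tokens)
    return -total / len(probs_for_correct_tokens)

# A model that puts high probability on the correct tokens...
confident_and_right = cross_entropy([0.9, 0.95, 0.9])
# ...versus one that is mostly guessing.
unsure = cross_entropy([0.5, 0.4, 0.3])

print(confident_and_right < unsure)
```

The better the model's guesses, the closer the loss gets to zero, which is the "convergence" I was cheering about.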

Technical Details:

- Base Model: Gemini 1.5 Flash 001 Tuning

- Tuned Examples: 20 (real emails) + 20 (synthetic)

- Epochs: 13

- Batch Size: 4

- Learning Rate: 0.001

Chapter 4: Synthetic Data

Saturday 9pm. 15 hours till presentation (aka 7 hours of productive time).

It was time to pull some rabbits out of our hats. It's important in AI to train on the proper data, but you can't win a hackathon without some hacking. To address our 50% failure rate, we used our trusty LLM to generate 20 synthetic emails. We asked it to mimic the style of our real emails, and also to write "random emails" that had nothing to do with a sales order. One email, for example, simply requested "a gallon of milk".

Yes, data labeling is back. But for the last time! (Probably.) We tagged all new "synthetic" data with "GUEST" for unknown customers and "NOTFOUND" for unrecognized inventory items.

By closing time, we had a success rate of over 90%! 🎉

One of our teammates asked, "Why would we even consider manual data entry anymore?" If this can be done in 20 hours of work, why would we ever go back?
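A number like that only means something when it is measured on emails the model never saw during tuning, which is exactly the sin from Chapter 3. Here is a minimal sketch of holding out a test slice and scoring exact matches. The helper names, the 20% holdout, and the toy data are assumptions for illustration, not a record of how we measured at the hackathon.

```python
import random

def split_examples(examples, holdout_fraction=0.2, seed=0):
    """Shuffle once, then reserve a slice the model never sees during tuning."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    n_holdout = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]

def success_rate(predict, holdout):
    """Share of held-out emails whose predicted order matches the label exactly."""
    hits = sum(1 for email, expected in holdout if predict(email) == expected)
    return hits / len(holdout)

# 40 toy (email, label) pairs, standing in for 20 real + 20 synthetic examples.
examples = [(f"email {i}", {"CustomerID": f"C{i}"}) for i in range(40)]
train, holdout = split_examples(examples)
print(len(train), len(holdout))
```

Tune on `train`, call `success_rate` with the tuned model on `holdout`, and the percentage you quote to the marketing team will survive contact with real customers.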

Chapter 5: Present and Win

Sunday morning. 4 hours left until the presentation!

It was all hands on deck to finalize the presentation. I made some tweaks to the LLM, just in case it forgot everything overnight, and tried to polish off a few rough edges (mostly trying not to sweat).

When it came time to present, Brian masterfully set the stage with humor and a skit. I dove in for the demo of our Narrow AI Agent.

One judge challenged us. "Can your AI do the same with Shipment emails?"

"No. But want to know our secret? It only took 20 emails! If I got my hands on 20 emails of shipment requests, it would do the same."

All of the other projects were so incredible. And our model could simply not do as much as we wanted it to do. It truly shows some of the brilliant innovation out there. Our team joked that we should have just called ourselves, "Narrow AI".

AI is rapidly evolving as it goes from outside the application to inside the application.

A hot topic is Large Models vs Small Models.

Large models (such as ChatGPT) have hundreds of billions of parameters that allow them to act with PhD-level intelligence (so the pitch goes). These models do well in math, coding, and science when there is a definitive answer. Many of the other Hackathon teams used large models with prompt engineering to solve their problems. Inevitably, there is a risk of hallucinations, and compute costs are high.

Where there is not a clear answer, the models can hallucinate, aka make stuff up.

Small models, on the other hand, are trained for a specific task. There is a definitive output.

Imagine you have an army of narrow AI agents that solve narrowly defined problems. This way, systems are built like Lego bricks. The combination of specialized tools working together grows into a symphony that produces powerful results.

And it should be no surprise we were honored, shocked and thankful that our Narrow AI solution took 1st place.

If I Were to Build the Solution Again: Future Improvements

We accomplished a lot in very little time, but there is always room to grow. Here are the ideas swirling around in my head:

- More Data: Always!

- At the first step of the workflow, the AI agent should classify an email as "Sales Order", "Quote", or "Shipment". That would make space for us to process more specialized actions.

- JSON was a mistake. If we are to build an army of narrow AI agents, we need to pass simple business information between them.

- RAG (retrieval-augmented generation). Our solution could leverage an additional AI model to look through our database. And instead of asking the model in our prompt... "If unsure of this information return GUEST or NOTFOUND"... we would ask the database model, "Find a valid customer number in the database with an email similar to X".

Retrieval-augmented generation (RAG) is a technique that brings external knowledge sources into the AI model. In short, a model needs a source of data to reason upon. By having your agent query the database, you give your narrow AI agent real-time info on your product offerings, services, business customers, or anything else that can be stored in your company database.
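As a rough sketch of that "find a customer with an email similar to X" lookup, here is the simplest stand-in I can think of: fuzzy string matching with Python's standard `difflib`. A real RAG setup would query the actual database or use an embedding search; the customer table, the 0.8 cutoff, and the function name below are all assumptions.

```python
import difflib

# Hypothetical customer table; in practice this would be a database query.
CUSTOMERS = {
    "orders@abccorp.com": "ABCCORP",
    "purchasing@northwind.example": "NORTHWIND",
}

def find_customer_id(sender_email, cutoff=0.8):
    """Return the ID of the closest known email, or GUEST when nothing is close."""
    matches = difflib.get_close_matches(
        sender_email.lower(), list(CUSTOMERS), n=1, cutoff=cutoff
    )
    return CUSTOMERS[matches[0]] if matches else "GUEST"

print(find_customer_id("order@abccorp.com"))    # near miss on a known address
print(find_customer_id("someone@unknown.org"))  # nothing similar on file
```

The point is the division of labor: the lookup answers "who is this customer?" from real data, so the model no longer has to guess and fall back to GUEST on its own.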

Final Thoughts

This hackathon was an absolute rollercoaster. I walked away feeling grateful that the team and I solved all the challenges that were put before us. We've proven a concept and shown that implementing an AI agent directly in the business logic layer of Acumatica is both possible and a fantastic avenue of optimization for all organizations.

We took what seemed like a "black box" and gave it a set of predictable and replicable tools that make narrow AI models impactful to any business. And in the end, we have a tool to solve real problems.

So here's the question... why wouldn't you implement a narrow AI agent to start handling those annoying, time-consuming data tasks in your office? I will leave that for you to ponder. And, of course, don't hesitate to reach out if you have your own Narrow AI dreams you want to chat about. 😉